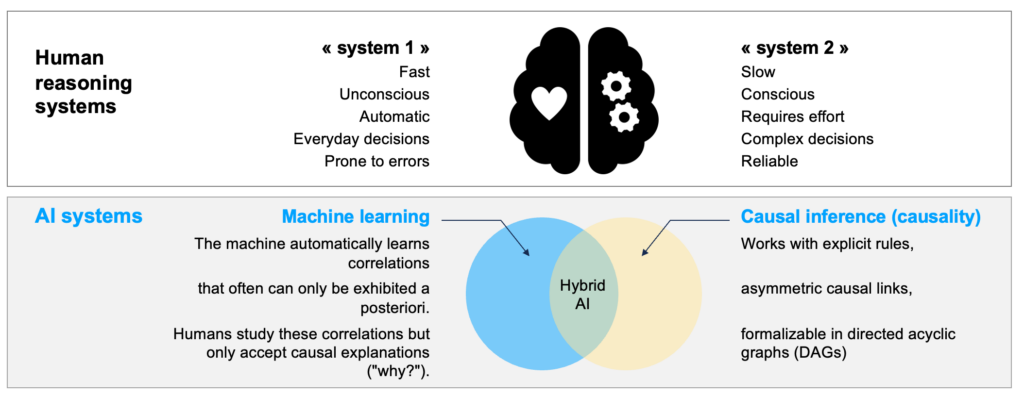

In 2011, psychologist Daniel Kahneman, a Nobel laureate in economics, published « Thinking, Fast and Slow », a book in which he describes two systems of human reasoning: one fast and intuitive (« System 1 »), the other slow and logical (« System 2 »).

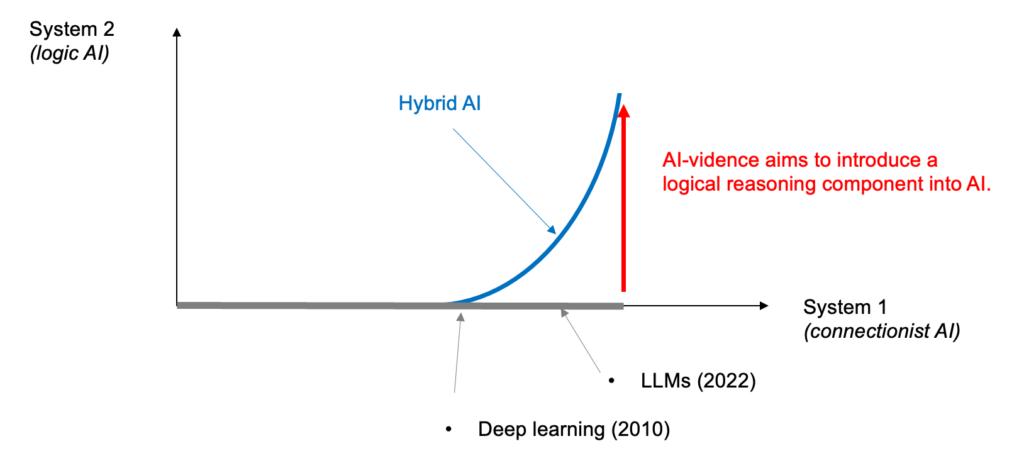

This book inspired us, as it did many others. We believe that this principle of two systems can be applied to machines as well. Machine learning is akin to System 1, enabling the « intuitive » perception and recognition of patterns. System 2, which is largely absent from current AI systems, would correspond to logical, rule-based AI.

The rise of AI in recent years has relied almost exclusively on connectionist AI, which falls under System 1. Humans cannot readily understand how an LLM (Large Language Model) operates, and so cannot fully trust the content it generates. Therefore, we believe it is necessary to introduce a logical component into future AI systems to restore trust and provide assurances.

The hybridization of AI thus consists of rebalancing the functioning of machines by reintroducing logic.

This is a long-term goal: there is a long way to go before logical AI makes up a significant part of the systems we know today. But it is the only path we believe leads to truly trustworthy AI.

Hybridizing AI requires a deeper understanding of the phenomena involved, so that they can be modeled better.

Our R&D department is pursuing several lines of work in parallel to deepen this understanding, model phenomena better, and make our models more robust and more ethical. See our perspective on trustworthy AI and our approach to characterizing it.

Below, we briefly outline our research areas.

Causality allows for the identification of causal links between variables. This helps reduce the number of variables needed for prediction and consequently reduces the complexity of a model. In the case of sensitive variables, identifying a graph explaining the relationships between variables is particularly useful.
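As a minimal sketch of how a causal graph can narrow down the variables used for prediction, the example below reads the direct causes of a target from a small hand-written graph. The graph, the variable names, and the helper functions are all hypothetical and chosen for illustration only; this is not the API of any particular library, and it assumes the graph is already known and correct.

```python
# Hypothetical causal graph, written by hand for illustration:
# each key is a variable, each value lists its direct causes (parents).
CAUSAL_PARENTS = {
    "age": [],
    "income": ["age"],
    "zip_code": [],
    "debt_ratio": ["income"],
    "default": ["income", "debt_ratio"],
}


def direct_causes(graph, target):
    """Return the variables with an arrow pointing directly at `target`."""
    return sorted(graph[target])


def ancestors(graph, target):
    """Return every variable that has a directed path to `target`."""
    seen = set()
    stack = list(graph[target])
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph[node])
    return sorted(seen)


# Candidate predictors: only the direct causes of the target.
predictors = direct_causes(CAUSAL_PARENTS, "default")

# Variables that influence the target, directly or indirectly.
upstream = ancestors(CAUSAL_PARENTS, "default")

# Variables with no causal path to the target at all.
unrelated = sorted(set(CAUSAL_PARENTS) - set(upstream) - {"default"})
```

In this toy graph, two of the four candidate variables remain as predictors, and the graph makes it visible that the sensitive variable `age` reaches the target only through `income`.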

AI-vidence has developed Causalink, its first Python library for discovering causal links within a variable graph. We believe that representing a problem as a graph of causal links is extremely relevant for capitalizing on the knowledge of business experts and enabling them to apply their « common sense » constructively. By relying on a causal approach, data scientists and business experts can confidently infer models and use them as substitutes for a complex model.

After the exploration and critique phase with Causalink comes the modeling and prediction phase. We have also developed Causalgo, a predictive engine adapted to this formalization.

Several of our approaches fall within the realm of topology.

The first is the concept of regionalization, which is dear to us and gave rise to regional explanation (read our article). The idea is that a phenomenon cannot be adequately understood at a micro (local) or macro (global) level alone. Only an intermediate level of analysis allows us to capture its essence. Moreover, beyond the relevant modeling that can subsequently be done in each of these regions, the division into regions itself carries meaning. Conversely, we cannot decompose a phenomenon into simple parts if we do not understand it.
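The intuition can be sketched on a toy phenomenon with two regimes. The example below compares a single global linear model with one simple linear model per region. The data, the split point, and the function names are assumptions made for illustration; in practice, finding the regions is the hard part, and here the split is simply taken as known.

```python
def fit_line(points):
    """Ordinary least-squares fit y = a*x + b on a list of (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = cov_xy / var_x
    return a, mean_y - a * mean_x


def mean_abs_error(points, a, b):
    """Mean absolute error of the line y = a*x + b on the given points."""
    return sum(abs(y - (a * x + b)) for x, y in points) / len(points)


# Toy phenomenon with two regimes: y = -x for x < 0, y = 2x for x >= 0.
data = [(x, -x if x < 0 else 2 * x) for x in range(-5, 6)]

# Global view: one line for the whole dataset.
global_error = mean_abs_error(data, *fit_line(data))

# Regional view: one line per region (the split at x = 0 is assumed known).
regions = {
    "x < 0": [p for p in data if p[0] < 0],
    "x >= 0": [p for p in data if p[0] >= 0],
}
regional_models = {name: fit_line(pts) for name, pts in regions.items()}

# Point-weighted average of the per-region errors.
regional_error = sum(
    mean_abs_error(pts, *regional_models[name]) * len(pts)
    for name, pts in regions.items()
) / len(data)
```

Each regional model is trivially readable (a slope and an intercept), and the regional fit is exact on this toy data while the global line is not. The split itself also carries information: it marks where the phenomenon changes regime.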

A regionalized, « multi-scale » approach is particularly necessary for analyzing how LLMs work. It is known that these models encode representations of the elements they manipulate in a so-called latent space. Thus, in the case of natural language processing, LLMs hierarchically manipulate representations of tokens, words, phrases, ideas, concepts, reasoning, etc. See our article on topic modeling.

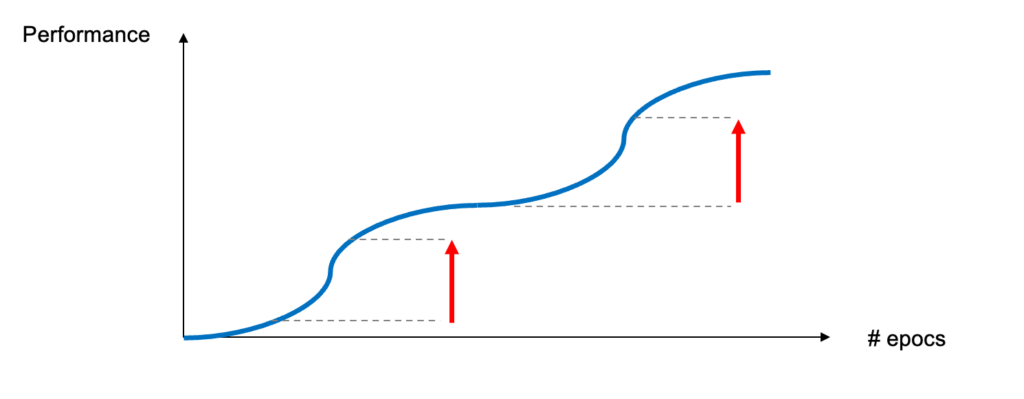

Another application of topology stems from the observation that the performance of a neural network does not increase linearly with the number of model parameters, nor with the number of training « epochs ».

On the contrary, the model progresses in fits and starts. It goes through several successive « clicks ». For example, as a language model becomes more complex, it becomes increasingly capable of « learning » and gradually « understanding » more complex phenomena (emphasis, punctuation, questions, etc.).

Similarly, during training, each new understanding comes with a performance leap linked to one of these « clicks ». Most often, a click corresponds to a local configuration of the model’s parameter space. It is then conceivable to replace this subset of parameters with a simpler and perfectly explainable logical AI module.

These topological approaches aim to constitute an aggregated, simplified layer that enables human understanding of the model’s prediction logic.

Computer vision, for example, employs deep neural networks. These networks themselves illustrate the concept of multiscale segmentation: the initial convolutional layers detect simple graphic primitives (such as an edge or the pointed shape of cat ears), and later layers assemble them to recognize more complex compositions (a face, a body…).
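To make the idea of a « simple graphic primitive » concrete, here is a minimal sketch of what a single convolutional filter computes. The filter below is a hand-written, Sobel-like vertical-edge detector applied to a tiny synthetic image; a trained network would learn its own filters, so both the kernel and the image are illustrative assumptions.

```python
def convolve2d(image, kernel):
    """Valid-mode 2-D cross-correlation, the operation used in convolutional layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 4x6 synthetic image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-written vertical-edge filter (illustrative, not learned).
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = convolve2d(image, vertical_edge)
# response == [[0, 4, 4, 0], [0, 4, 4, 0]]
```

The response is zero over the uniform areas and strong where the window straddles the dark-to-bright boundary. Deeper layers combine many such responses into detectors for more complex shapes.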

Our idea is to leverage such perception tools to classify components of a scene. The rest of the intelligence could be entrusted to a more frugal and partially logical AI model.

This amounts to hybridizing an AI model, moving from a monolithic AI model to an AI system: perception tasks are delegated to statistical models (neural networks), which offer significant performance gains, while decisions are made by a central orchestrator that must rely on robust and understandable techniques, characteristic of logical AI.
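The architecture described above can be sketched in a few lines. In this illustration, `perceive` is a stand-in for a neural perception model, and the orchestrator is a short list of explicit rules evaluated in priority order. The scene, the labels, the rules, and the confidence threshold are all hypothetical; this shows the pattern and is not a description of an existing system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float


def perceive(scene):
    """Stand-in for a neural perception model (hypothetical).

    A real system would run a trained network here; this stub simply
    wraps the (label, confidence) pairs stored in the toy scene.
    """
    return [Detection(label, conf) for label, conf in scene]


# Explicit, human-readable rules: (name, condition, decision), in priority order.
RULES = [
    ("pedestrian_present", lambda labels: "pedestrian" in labels, "stop"),
    ("red_light", lambda labels: "red_light" in labels, "stop"),
    ("clear_road", lambda labels: True, "proceed"),
]


def decide(detections, min_confidence=0.8):
    """Return (decision, reason); the first matching rule wins."""
    # Defer to a human when any detection is too uncertain.
    if any(d.confidence < min_confidence for d in detections):
        return "ask_human", "low_confidence_perception"
    labels = {d.label for d in detections}
    for name, condition, decision in RULES:
        if condition(labels):
            return decision, name
    # Safety net in case the rule list is edited and no rule matches.
    return "ask_human", "no_rule_matched"


decision, reason = decide(perceive([("pedestrian", 0.95), ("car", 0.90)]))
# decision == "stop", reason == "pedestrian_present"
```

Because every decision is returned together with the name of the rule that produced it, the decision layer stays auditable even though the perception layer is a statistical model.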